Laser Weld Defect Detection

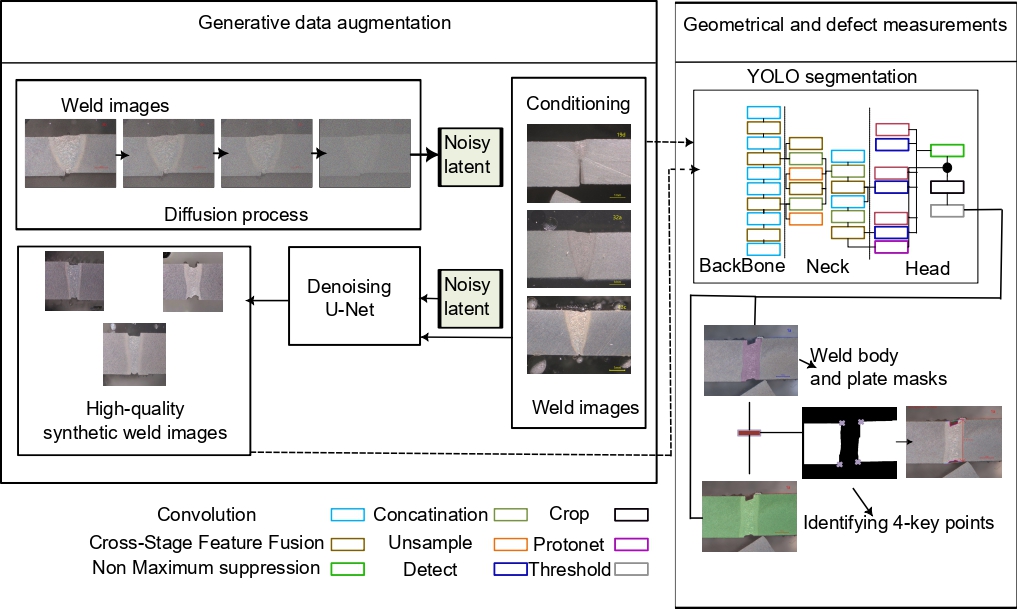

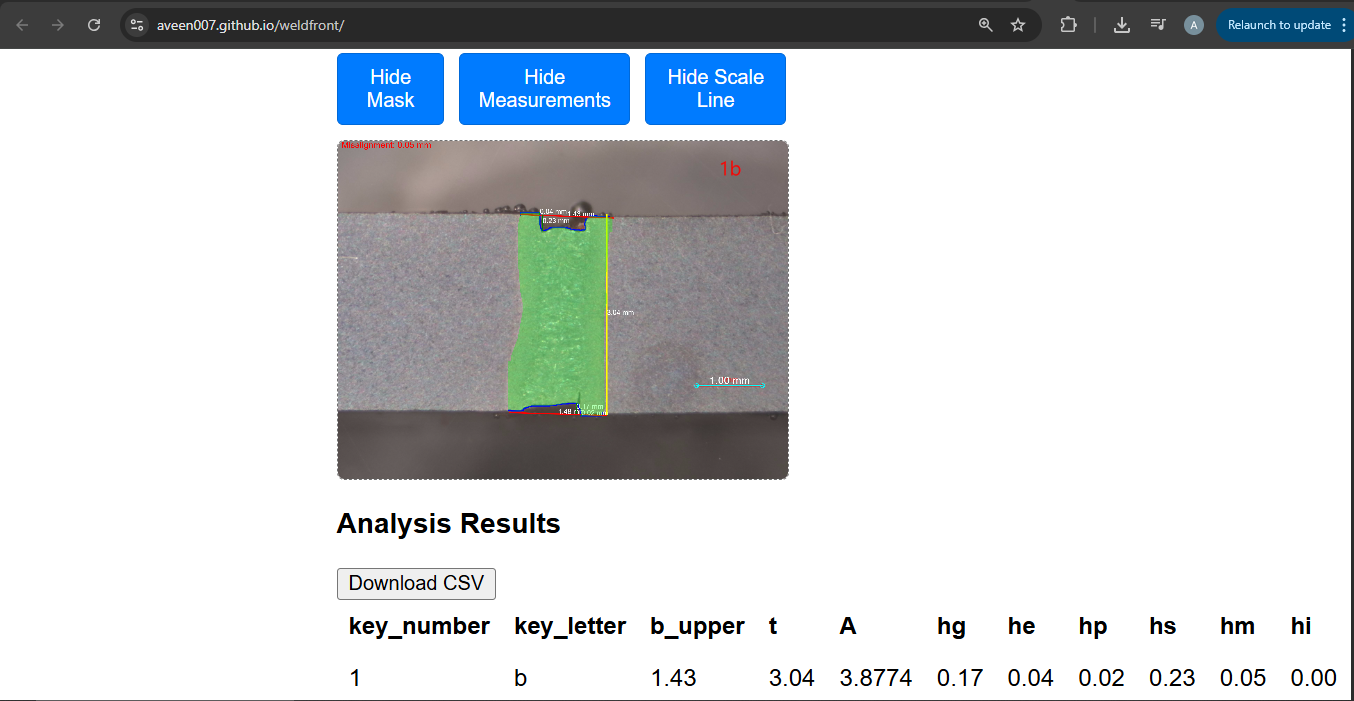

Automated quality control system using semantic segmentation and generative data augmentation for laser weld inspection.

This project addresses the critical need for automated quality control in laser welding processes through computer vision and machine learning. Traditional manual inspection of weld macrosections is slow, subjective, and error-prone, making automation essential for modern manufacturing.

Project Overview

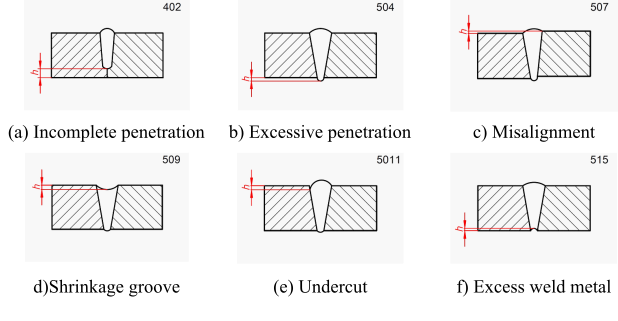

Laser-weld macrosection analysis is essential for assessing weld quality and classifying defects according to the ISO 6520-1 standard. This research proposes an automated pipeline that integrates generative data augmentation with weld segmentation to overcome the limitations of manual inspection and data scarcity in industrial settings.

Technical Approach

Generative Data Augmentation

To address the scarcity of annotated industrial weld data, we used Stable Diffusion to synthesize weld macrosection images. This approach generated up to 4,500 synthetic images, expanding the training set well beyond the 166 original macrosections while preserving the contour features that standard augmentations such as flipping or cropping can distort.

Semantic Segmentation Architecture

We evaluated multiple state-of-the-art segmentation models for defect identification:

- DDRNet: Deep Dual-Resolution Network maintaining parallel high- and low-resolution feature streams

- BiSeNet: Bilateral Segmentation Network combining spatial and contextual paths

- YOLOv11: Adapted for segmentation with stable inference and strong robustness

Experimental Results

Our evaluation employed both standard segmentation metrics and domain-specific geometrical measurements compared against expert manual assessments:

| Model | mIoU | Agreement Accuracy |
|---|---|---|
| DDRNet | 95.46% | 88.62% |
| BiSeNet | 95.21% | 94.78% |
| YOLOv11 | 95.24% | 97.50% |
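
For readers unfamiliar with the mIoU column, the following is a minimal sketch of how mean intersection-over-union can be computed from two label masks. The function name `mean_iou`, the class labels, and the toy masks are illustrative assumptions, not the project's evaluation code; the actual pipeline may average over images or classes differently.

```python
import numpy as np

def mean_iou(pred, target, num_classes):
    """Mean IoU over the classes that appear in either mask."""
    pred = np.asarray(pred)
    target = np.asarray(target)
    ious = []
    for c in range(num_classes):
        pred_c = pred == c
        target_c = target == c
        union = np.logical_or(pred_c, target_c).sum()
        if union == 0:
            continue  # class absent from both masks, so it is skipped
        intersection = np.logical_and(pred_c, target_c).sum()
        ious.append(intersection / union)
    if not ious:
        return float("nan")  # no class in range(num_classes) was present
    return float(np.mean(ious))

# Toy 4x4 masks (hypothetical labels: 0 = background, 1 = weld).
target = np.array([[0, 0, 0, 0],
                   [0, 1, 1, 0],
                   [0, 1, 1, 0],
                   [0, 0, 0, 0]])
pred = np.array([[0, 0, 0, 0],
                 [0, 1, 1, 1],   # one extra weld pixel predicted
                 [0, 1, 1, 0],
                 [0, 0, 0, 0]])

print(mean_iou(pred, target, num_classes=2))  # roughly 0.858
```

In the toy case the weld class scores 4/5 and the background 11/12, which illustrates why a high mIoU alone does not guarantee accurate geometry: a single misplaced boundary pixel barely moves the score but can shift a width or depth measurement.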

Key Findings:

- YOLOv11 achieved 97.5% agreement with expert measurements across 1,312 defects

- All models demonstrated high mIoU scores (>95%)

- The pipeline successfully identified critical defects including continuous undercut, shrinkage groove, excess weld metal, and linear misalignment

- Generated synthetic data maintained geometrical accuracy with <10% deviation from expert measurements
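
The text does not spell out how agreement with expert measurements is computed. One plausible reading, sketched below, counts a measurement as agreeing when its relative deviation from the expert value stays within a tolerance. The function name `agreement_accuracy`, the 10% default tolerance, and the sample values are all assumptions made for illustration; they are not the project's data or its confirmed definition.

```python
import numpy as np

def agreement_accuracy(predicted, expert, tolerance=0.10):
    """Fraction of measurements within `tolerance` relative deviation.

    Assumes every expert value is non-zero.
    """
    predicted = np.asarray(predicted, dtype=float)
    expert = np.asarray(expert, dtype=float)
    relative_dev = np.abs(predicted - expert) / np.abs(expert)
    return float(np.mean(relative_dev <= tolerance))

# Made-up measurements in millimetres, for illustration only.
expert_mm = [1.20, 0.35, 2.10, 0.80]
predicted_mm = [1.25, 0.42, 2.05, 0.81]

# The second value deviates by 20%, the other three by under 5%.
print(agreement_accuracy(predicted_mm, expert_mm))  # 0.75
```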

Implementation Details

Data Collection

- Material: 09G2S pipeline structural steel (3 × 150 × 450 mm)

- Camera positioned at multiple locations along the weld (72 mm, 152 mm, 232 mm, 312 mm)

- 166 original weld macrosection images collected from the manufacturing line

Measurement Parameters

- Weld width (b), depth (s), and heat-affected zone area (Aw)

- Defect quantification according to the ISO 6520-1 standard

- Real-time monitoring and control capabilities
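
As a rough illustration of how such parameters can be read off a segmentation mask, the sketch below measures the horizontal extent, vertical extent, and pixel area of one labelled region and converts them to millimetres. Treating width and depth as bounding-box extents is a simplifying assumption, as are the function name `region_geometry`, the label value, and the `mm_per_pixel` scale; the project's actual measurement definitions may differ. For the heat-affected zone area, the same function would be called with that region's label.

```python
import numpy as np

def region_geometry(mask, label=1, mm_per_pixel=0.01):
    """Bounding extents and area of the pixels equal to `label`."""
    region = np.asarray(mask) == label
    if not region.any():
        return {"width_mm": 0.0, "depth_mm": 0.0, "area_mm2": 0.0}
    rows = np.flatnonzero(region.any(axis=1))  # rows containing the region
    cols = np.flatnonzero(region.any(axis=0))  # columns containing the region
    width_px = cols[-1] - cols[0] + 1
    depth_px = rows[-1] - rows[0] + 1
    return {
        "width_mm": float(width_px * mm_per_pixel),
        "depth_mm": float(depth_px * mm_per_pixel),
        "area_mm2": float(region.sum() * mm_per_pixel ** 2),
    }

# Toy mask: a weld-like shape that is wide at the top and narrow below.
mask = np.zeros((5, 6), dtype=int)
mask[1, 1:5] = 1  # 4 pixels wide at the surface
mask[2, 2:4] = 1
mask[3, 2:4] = 1

geometry = region_geometry(mask, label=1, mm_per_pixel=0.5)
print(geometry)
```

With the hypothetical scale of 0.5 mm per pixel, the toy region spans 4 columns and 3 rows and covers 8 pixels, giving a width of 2.0 mm, a depth of 1.5 mm, and an area of 2.0 mm².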

Research Impact

To our knowledge, this work is the first to combine generative augmentation and segmentation for reliable, high-precision weld macrosection inspection. The pipeline enables:

- Automated Quality Control: Reduces manual inspection time and subjectivity

- High-Precision Measurements: Achieves expert-level accuracy in defect quantification

- Scalable Deployment: Suitable for integration into manufacturing production lines

- Standard Compliance: Follows the ISO 6520-1 classification of weld imperfections

Publications

This research has been presented at international conferences:

- GECCO 2025: Genetic and Evolutionary Computation Conference (ACM/SIGEVO)

- FLAMN 2025: International Symposium "Fundamentals of Laser Assisted Micro- and Nanotechnologies"

Code Repository: LaserWeldMonitor on GitHub

Technologies Used

- Computer Vision: YOLOv11, DDRNet, BiSeNet

- Generative AI: Stable Diffusion for data augmentation

- Deep Learning: PyTorch, semantic segmentation

- Industrial Standards: ISO 6520-1 compliance

- Programming: Python, OpenCV, image processing libraries

References

Mao, G.; Cao, T.; Li, Z.; and Dong, Y. 2025. Enhancing Shape Perception and Segmentation Consistency for Industrial Image Inspection. arXiv:2505.14718.

Pan, H.; Hong, Y.; Sun, W.; and Jia, Y. 2022. Deep Dual-Resolution Networks for Real-Time and Accurate Semantic Segmentation of Traffic Scenes. IEEE Transactions on Intelligent Transportation Systems.

Reiss, T.; Cohen, N.; Bergman, L.; and Hoshen, Y. 2021. PANDA: Adapting Pretrained Features for Anomaly Detection and Segmentation. In 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2805–2813.

Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; and Ommer, B. 2022. High-Resolution Image Synthesis with Latent Diffusion Models. arXiv:2112.10752.

Shorten, C.; and Khoshgoftaar, T. M. 2019. A survey on Image Data Augmentation for Deep Learning. Journal of Big Data, 6(1): 60.

Tran, T. A.; Lobov, A.; Kaasa, T. H.; Bjelland, M.; and Midling, O. T. 2021. CAD integrated automatic recognition of weld paths. The International Journal of Advanced Manufacturing Technology, 115(7): 2145–2159.

Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; and Sang, N. 2018. BiSeNet: Bilateral Segmentation Network for Real-time Semantic Segmentation. arXiv:1808.00897.